
In the scant arsenal of the historian, the concept of the century has an outsized place. A historian's century needn't strictly adhere to the dictates of the calendar: Most historians, for instance, incline to the view that the twentieth century began with the outbreak of World War One in 1914. (Though there is the unsettling fact that by the time the novelist Henry James died in 1916, he had acquired electric lighting, ridden a bicycle, written on a typewriter, seen a movie, and could have had a Freudian analysis, flown in a plane, and understood the principles of the jet engine and space travel.)

However defined, one of the distinctive features of the twentieth century was the rise of the large, regulatory, welfare-warfare state to become the default model for governance, replacing the monarchical-aristocratic and laissez-faire liberal polities that were the nineteenth century's norms.

Large-scale historical changes spurred the transformation: the rise of industrial economies; popular nationalism and cultural unification; and the ever-expanding repertoire of bureaucratic, military, and economic policies that fostered the growth of government. New technology and new forms of political, social, and economic control intertwined to promote scale and centralization.

The twentieth-century state took a variety of forms. Hitler's Germany, Lenin and Stalin's Soviet Union, and Mao's China rose and fell in their distinctively destructive fashions. Tinhorn versions of the totalitarian model cropped up in Cuba, North Korea, Vietnam, and Cambodia. Authoritarian kleptocracies became the norm in much of Latin America, the Middle East, and post-colonial Africa. Most of Western Europe along with its Canadian and Australasian outliers adhered to a third, far more benign model of representative democracy. But whatever the form, the trend line was the growth of the big-spending state. Between 1870 and 2007, government spending as a portion of national income rose from 9.4 to 44.6 percent in Great Britain, from 10 to 43.9 percent in Germany, and from 12.6 to 52.6 percent in France.

And then there is the United States, in so many ways the West's outlier. It had no history of feudalism, aristocracy, or monarchy, and came up with the first large liberal-democratic polity. But this heritage had its costs. American democracy, individualism, and voluntary association went hand in hand with American slavery, which flourished even as abolition took hold elsewhere in the Western world. And Southern secessionism, slavery’s close companion, rose while unification became the norm in Western Europe, notably in Germany and Italy. What historian David Donald called “an excess of democracy”—the freedom of Americans to create their own forms of social organization, from religion and labor to politics and government—fed the nation's greatest social and political failures as well as its triumphs.

It is not surprising, then, that America’s twentieth-century welfare-warfare state marched to a slower-paced drummer than did its European counterparts. The United States always lagged behind Western Europe in its commitment to big-time social welfare. And until the 1940s it was by European standards dilatory (though by no means lacking) in its readiness to engage in big-time warfare.

Still, over the course of the twentieth century America's trajectory roughly tracked those of the major European states. The Federal government's slice of national income rose from 7.3 percent in 1870 to 36.6 percent in 2007. The New Deal was a kissing cousin of European social democracy. In their own initially reluctant, then ebullient way, the American people bellied up to the bars of World Wars I and II. Far less bashfully, the nation assumed the leading role in the Cold War and the War on Terror.

The Rise of the Modern American State

America's first broad exposure to the large, active national state (aside from the transient Civil War and Reconstruction years) came in the first two decades of the twentieth century. While influenced by contemporary European models, the new American state had its own distinctive features. Europe's states were in thrall to their feudal, aristocratic, and monarchical past, on the one hand, and their socialist-colored present, on the other. Continental welfare states tended to be the product of a mix of top-down noblesse oblige, venerable guild traditions, and popular new social democratic agendas. Only England also had the humanitarian and evangelical strand that occupied so prominent a place in American social reform. And no nation matched the American impulse, born not of socialism but of its individualistic tradition, to break up large corporate enterprises. Cartels, not antitrust, were the European norm.

American Progressives were of two minds about how to change the shape of their society. They sought to carve back the power of regnant party bosses and machines. But while some of them favored more direct, popular democracy, others were drawn to social engineering and government by technocrats. As always and everywhere, educated elites had a strong preference for governance by . . . educated elites. Many of them believed in the purifying political potential of enabling women to vote. Equally attractive (often to the same people) was the purifying potential of making it harder for blacks and immigrants to vote. Some took that impulse all the way to the lure of eugenics.

Progressives were inconsistent as well in their response to the rise of big business. Some, in the antitrust tradition, sought to break up over-large corporations (too big to succeed?). Others wanted to keep the benefits of concentrated business and finance (too big to fail?), but subject to government oversight. That difference more or less summarizes the plot of the 1912 election: Wilson's New Freedom emphasized the reduction of big business, Roosevelt's New Nationalism its regulation.

A similar eclecticism characterized Progressive social reform. There was commendable interest in improving the living and working conditions of the heavily immigrant industrial population. But this humanitarian concern coexisted with xenophobic and social authoritarian impulses. The two most substantial social “reforms” of the Progressive era were Prohibition and racism-infused immigration restriction based on national quotas.

A messianic theme colored Progressive perceptions of America’s relationship with the outside world: not so much Kipling's White Man's Burden as a more chauvinistic White Americans’ Burden. Ideological and territorial outreach merged seamlessly with commercial and financial self-interest. The United States got its warfare-state feet damp with a "splendid little war" against Spain in 1898: a cut-rate exercise in European-style imperialism. And then, during World War I, the nation underwent a short but thorough immersion in big-war militarization, mobilization, and repression of dissent.

Progressive foreign policy ranged from the “he kept us out of war” neutrality of Woodrow Wilson’s first term to the “war to end all war” interventionism of his second term. In its ultimate application, that policy led Wilson to seek in the League of Nations an international public agency with the capacity to enforce world peace: to export the ideal of Progressive reform to the world.

The impulse to replace nineteenth-century American laissez faire with a more purposeful, efficient, elite-led reform agenda went beyond the airy domains of national domestic and foreign policy and into the nitty-gritty of state and city public life. Iron triangles of political bosses and machines, contractors and corporations, and corrupt governors, mayors and legislators had taken hold during the course of the nineteenth century. At the turn of the twentieth there arose in response a burst of media exposure (“muckraking”), a popular political reaction, and a substantial body of reform: the Progressive movement at play in the nation's cities and states as well as in the national state.

The Progressive record was a large and transformative one. But from the perspective of a century later, it was mere prologue. FDR's New Deal, like FDR himself, was grounded in the Progressive model. Indeed, much of the First New Deal drew inspiration and legitimacy from America's World War I mobilization. But the 1930s and 1940s were vastly different from the early twentieth century. The responses to the Great Depression and World War II were massive national undertakings, comparable only to the Civil War.

In its largest sense the New Deal was a response to the great crisis of a mature urban-industrial society, as the Progressive movement responded to that society's emergence. It created the rudiments of a welfare state; it smoothed the entry of new immigrants and industrial workers into the American political mainstream; and it brought the Federal government to the forefront of American public life.

World War II had far deeper economic, social, and cultural consequences than its World War I predecessor. The earlier conflict exacerbated ethnic and racial tensions; the later one began the process of easing them. It also set the stage for America's leadership role in the Cold War geopolitics that followed and, later, in the War on Terror.

The civil rights revolution and Lyndon Johnson's Great Society reinforced the model of an ever-expanding national state set in motion by the New Deal and World War II. True, traditional, small-government beliefs persisted, most notably in the rhetoric (more than in the actual policies) of Presidents Eisenhower and Reagan. But while Eisenhower's Interstate Highway System, Kennedy's space program, Johnson's Medicare, Nixon's EPA and OSHA, and George W. Bush’s prescription drug extension of Medicare may not have added up to a seamless program of reform, they had the common quality of extending the Federal presence in American life.

The American polity came increasingly to be a regulatory-welfare-warfare state. Complexes, in both the organizational and attitudinal senses of that word, grew like weeds: military-industrial, welfare-educational-environmental, security-intelligence. By the late twentieth and early twenty-first centuries, the American state governed more, spent more, and intervened abroad more, by some orders of magnitude, than did its early-twentieth-century predecessor.

The Stall of the Modern American State

In recent years American governance has been afflicted by an apparently systemic crisis of overcommitted objectives and declining effectiveness. This is hardly unique to the United States. Mounting welfare costs, debt, recession, and debate over whether austerity or stimulus is the proper remedy flourish in Europe and Japan as well.

The European Union gives signs of living up to Gandhi's characterization of Western civilization as “a nice idea.” EU “subsidiarity” has recurring appeal, favoring local over national or international policies and voluntarism over bureaucracy. Separatism, xenophobia, and anti-EU sentiment are alive and well, and growing. Developments in Scandinavia, long the poster child of the social democratic state, are a sign of changing times. In recent years that region has turned to a more mixed model. Generous social benefits coexist with experiments in reduced deficits and taxation, and much privatization in education and health care.

The American welfare state, like its European counterpart, suffers from rising levels of inefficacy, debt, and bureaucratic turpitude. The achievements of postwar prosperity and the civil rights movement are threatened by the discontents of a halting economy and growing concern over economic and social inequality, the condition of public education, and the state of the American family. The triumphs of World War II and the Cold War have been succeeded by the frustrations of trying to cope with militant Islam and, more recently, a resurgent if still weak Russia and an ever-stronger, muscle-flexing China. Wherever one looks, the American state still is dominant. But everywhere one looks, dissatisfaction with its works is rife.

The resurgence of American distrust of government has a growing place in the nation's political dialogue. Gallup reports that over the past five years 35–45 percent of Americans have reported little or no confidence in their national government—close to the European average. In September 2013, 60 percent of Americans, a record level, thought the Federal government had too much power. The big-state, social-democratic model faces a growing challenge, just as “anything goes” capitalism did in the first half of the twentieth century.

For some time now, building on the ur-scenarios of Friedrich Hayek and Milton Friedman, the case for a smaller state and more locally grounded institutions has attracted intellectual and theoretical support. Examples include Mancur Olson's near-canonical The Logic of Collective Action (1965), E. F. Schumacher's Small Is Beautiful (1973), and James Buchanan's public choice theory. Anthropologist and political scientist James C. Scott’s Seeing Like a State (1998) argues that top-down state projects are fatally flawed because they are in the hands of planners and managers remote from the realities of everyday life.

More recently, Walter Russell Mead has argued that the “blue model” (his term for the institutional legacy of twentieth-century progressivism) is failing, and needs to give way to a “liberalism 5.0” that champions smaller, more private, less intrusive instruments conducive to the creation of wealth and more protective of individual freedom. High pop-sociology has seized on this theme, too. Nassim Nicholas Taleb’s Antifragile: Things That Gain from Disorder (2012) celebrates organic, bottom-up ways of doing things as against the “Soviet-Harvard delusions” of “top-down policies and contraptions.” Nicco Mele's The End of Big (2013) argues that “the radical connectivity” of the internet and social networks is “toxic to conventional power structures,” and predicts that “the top-down nation-state model . . . is collapsing.”

Alternatives to the centralized, bureaucratic, interventionist state frequently crop up in American public policy and social practice. Charter schools, vouchers, and home schooling throw down gauntlets to the educational establishment. 3-D printing raises the possibility of a game-changing alternative to the longtime default model of large factories and assembly lines. The internet and its progeny—social networks, online higher education, more direct links between buyer and seller—challenge the primacy of elite expertise, the mainstream media, universities, professionals, corporations, and the bureaucracy.

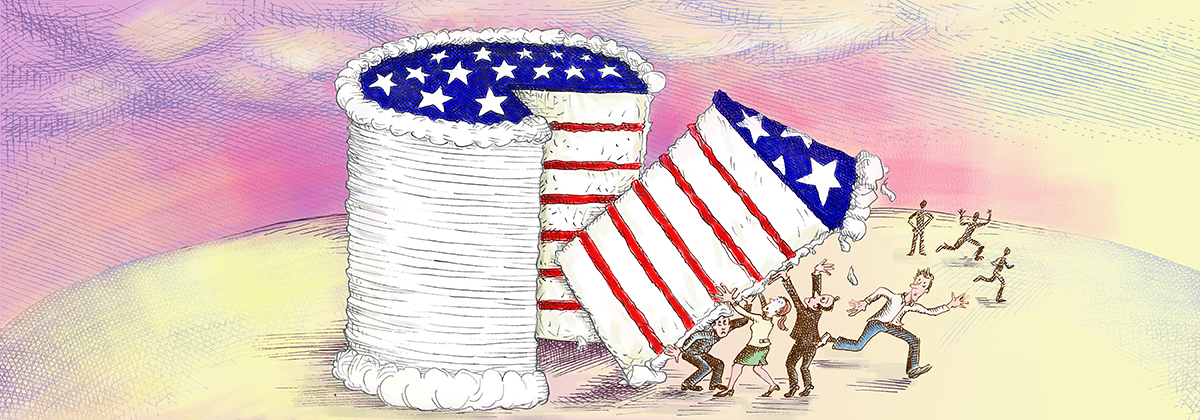

Old ways die hard, and the cake of big government is not easy to slice up. But three recent challenges to the warfare-welfare state may be straws in the American wind. First, American foreign policy appears to be increasingly infected by post-Iraq-Afghanistan syndrome (in today's jargon, PIAS). Troop withdrawals, defense budget cuts, and popular distaste for engagement overseas are all the rage. “Leading from behind” is the oxymoronic catchphrase of our time.

On the domestic front, the travails of Obamacare suggest that for the first time in recent memory Americans are coming face to face with a new entitlement they don't like. And a political storm has been gathering over the budgetary costs and pension entitlements of state and city workforces. In three very different policy realms (foreign affairs, social welfare, state and local entitlements), a similar discontent is evident: an anti-progressivism that is in some ways the antithesis of the Progressive movement of a century and more ago.

A New Isolationism?

Until recently the case could be made that the most successful aspect of Obama’s presidency was his foreign policy. Withdrawal from Iraq and Afghanistan, helping (however modestly) to rid Libya of Muammar Qaddafi, non-engagement in the Syrian civil war, and a diplomacy-centered challenge to Iran's nuclear ambitions were more popular than his costly, interventionist, bureaucracy-laden domestic initiatives.

Just as America's imperialist venture at the turn of the twentieth century petered out in the face of general indifference, so has the messianic spirit of the World War II-Cold War-War on Terror era shrunk in the face of high human and financial costs and slight returns. George W. Bush's neocon-inspired attempt to link post-9/11 counterterrorism to democratic state-building, his biggest failure, helped curb the popular appetite for quixotic adventures. The popular reaction to the NSA spying revelations is further testimony to the declining appeal of John F. Kennedy's call to “pay any price . . . to assure the survival and the success of liberty.” So, too, is the FY2015 budget proposal to reduce the U.S. Army to its pre-World War II size. This is not a uniquely American response: Europe's distaste for engagement was evident in the British Parliament’s vote against striking the Syrian regime.

The twentieth-century warfare state is shrinking as its fiscal and popular sustenance decline. The prevailing view is that World War II and Cold War policies were appropriate to the state-based aggression of the twentieth century, but less so to the ideological, guerrilla agenda of Islamic terrorism today.

True, there are indications that the new isolationism could prove to be as destabilizing as its between-the-wars predecessor in the twentieth century. Putin’s Sudeten-like Crimea intervention and Assad's Francoist civil war in Syria (with Russia and Iran standing in for Hitler’s Germany and Mussolini's Italy, and Saudi Arabia assuming the role of Stalin’s Russia) are eerie echoes of the 1930s. Iran’s nuclear effort shows little sign of being staunched, either by sanctions or by the current Western diplomatic effort. China today, like Japan in the 1930s, displays a discomfiting desire to impose itself on Southeast Asia.

Still, Obama has not (yet) had to face a decision between peace and war of the sort that confronted Wilson, FDR, Truman, or LBJ, or even a challenge as stark as Bush’s 9/11. Unless or until something like that happens, the prevalent popular distaste for an assertive American role abroad, and Obama's inclination toward that view, shows no sign of abating.

Obamacare: A Noble Experiment?

On the domestic side as well, the active-state model of the twentieth century shows signs of sliding into history's dustbin. Journalist Charles Lane has observed that America "periodically redefines the role of the federal government in society. . . . The post-New Deal consensus about the scope of federal power has broken down amid . . . concern over the welfare state's cost and intrusiveness."

Given its scale and aspirations, Obama's Affordable Care Act fits Herbert Hoover's characterization of Prohibition as "a great social and economic experiment, noble in motive and far-reaching in purpose." Bioethicist Ezekiel Emanuel, one of Obamacare's architects, predicts that "the ACA will increasingly be seen as a world historical achievement, even more important. . .than Social Security and Medicare has been."

But Obamacare has run into difficulties massive enough to raise questions about the viability of large-scale, government-run social entitlements, as Prohibition did about large-scale efforts at social control. Prohibition, like Obamacare, rested on a one-size-fits-all vision of social policy. It sought abruptly to change a deeply entrenched American drinking culture, as Obamacare seeks to do to a system of group-based, privately insured health care that serves 80 to 85 percent of the population.

In a stunning instance of the private sector overwhelming the public one, a massive alternative infrastructure to Prohibition quickly arose: whiskey-running from abroad; illicit booze-making at home; a plenitude of speakeasies and bootleggers that made a mockery of Prohibition enforcement. The consequence was an endless ronde of popular, effective law-breaking and unpopular, ineffective law-enforcing. Finally, the unimaginable became inevitable: In 1933, the Eighteenth Amendment was repealed, and the regulation of alcohol returned to the states and localities.

Does Obamacare face a similar future? Probably not. Its enabling law does not rely on an amendment to the Constitution, and so it is more susceptible to modification and revision. Nor does it have the sectional, religious, and cultural baggage that made Prohibition so potent a public issue. It may devolve into an adjunct to Medicare and Medicaid, much as state and local liquor regulation survived the passing of Prohibition.

Obamacare's deeply flawed implementation speaks to a widespread problem in modern government. It took three and a half years for the United States to go from its hapless state of preparedness at the time of Pearl Harbor to victory in Europe and the cusp of victory over Japan. It took the same stretch of time to get from Obamacare's passage to its calamitous October 2013 rollout. But the defects of the Obamacare model go beyond this. From the early days after its 2010 passage to the present, it has been dogged by major problems of implementation. Delays, waivers, cancelled policies, and flawed patterns of enrollment persist with no end in sight: an echo of the ongoing problems of Prohibition.

There has been a flurry of speculation as to the impact of Obamacare on American liberalism and the active, centralized state. Is it, like the Vietnam and Iraq wars, a sign that the century of the warfare-welfare state is ending, to be replaced by some other model of governance? Are we witnessing a systemic failure of big government, or a more limited failure by the Obama Administration? Pundit Michael Gerson observes that “maybe the problem is not Obama or Sebelius but rather a government program that requires superhuman technocratic mastery.”

Similar concerns surround the Dodd-Frank law, which sought to bring the sprawling American financial sector under closer government control, and suffers from implementation problems resembling those of Obamacare. These experiences suggest that a large program unchecked by the discipline of the market, or a vigorous press, or political wisdom, may have a short life expectancy. Who, in retrospect, could have thought that replacing a health care system that more or less satisfactorily served more than 80 percent of the population with one designed to better serve the other 20 percent would thrive? The answer: most of the expert, educated, chattering class.

The Shame of the States and Cities

Yet another challenge to the modern state is the brouhaha over the unaffordable wage and retirement entitlements created by states and cities, and their consequences for taxes, deficits, and debt. Again, a historical perspective may be instructive. The current state-city situation has parallels, and some contrasts, with the American experience at the height of the party period more than a century ago. Political patronage then was difficult (often impossible) to distinguish from graft and corruption. Today, aid to the needy has evolved from relief (“alleviation, ease, or deliverance through the removal of pain, distress, oppression”) to welfare (“the provision of a minimal level of well-being and social support for all citizens”). Public employee wage, health, and pension provisions have similarly evolved from fringe benefits to entitlements. These are terms freighted with political meaning. They reflect the twentieth-century sea change in the role of government, just as the current backlash may become part of a larger reaction against the Progressive model of governance.

Contemporary state and city governments have features that Progressive critics a century ago would have found familiar. Public employee unions resemble the contractors and corporations of the past. They provide the money and manpower that elections require, and in return get power and perks from complaisant pols. Public employees enjoy superior wages and health care and pension advantages, as loyal voters in the past got jobs and help in time of need. Immigrant workers and their votes sustained boss-machine politics then; public sector workers, the black urban poor, and Hispanic newcomers play a similar role today.

The troubles afflicting state and local government feed a reaction in some respects reminiscent of the Progressive movement of more than a century ago. That movement began in the cities and states of the Midwest and then spread across the nation. With varying degrees of commitment, governors Robert La Follette in Wisconsin, Hiram Johnson in California, Theodore Roosevelt and Charles Evans Hughes in New York, and Woodrow Wilson in New Jersey were prominent Progressives. Four of them (La Follette, Teddy Roosevelt, Hughes, and Wilson) ran for President.

Today the same states have leaders focused on what to do about large-scale spending, overly influential unions, and long-term entitlements. Republicans Chris Christie in New Jersey and Scott Walker in Wisconsin have confronted public unions and their members’ entitlements. Democratic governors Jerry Brown in California and Andrew Cuomo in New York have wrestled with the same issues, although in a fashion that reflects their different party identity: more accommodating with the unions, more stress on tax increases.

The governors challenging the current system may be forerunners of what might be called an anti-Progressive movement, one as concerned with limiting or even reducing government power and largesse as its prototype a century ago was inclined to enhance it. Today's advocates of reform, however, are as yet far from securing so substantial a political presence, and indeed may never do so. Still, the echoes are there.

La Follette’s Wisconsin was the poster child of Progressive reform. Today, with Governor Walker and Congressman Paul Ryan, it has been comparably conspicuous in the effort to roll back entitlement spending. Walker raised an especially contentious challenge to the state’s alliance of public sector unions and Democrats when he got the legislature to sharply curtail collective bargaining by most public employees. He had to face a recall election in 2012, but won by a larger margin than in his initial election two years before. The new austerity, like the old Wisconsin progressivism, is far from coherent or fully defined. But, also like its predecessor, it strikes a popular chord.

California was the great success story of post-World War II America, and it became a showcase for the effects of large-scale public spending and ample public employee entitlements. What was special about the Golden State was the unusual importance of Progressive-era innovations in participatory government: the initiative and the referendum. These devices, originally intended to counter the power of party machines and corporations, turned out to be well-suited to a populist, post-party political culture—and to the growth of entitlements.

But the fiscal and policy costs of decades of growth in California's public workforce and its perks became ever more evident. Highways and schools decayed; welfare costs and public worker benefits outstripped the revenue available to pay for them; business and income taxes rose steeply; growing numbers of businesses and middle-class citizens departed. California's budget crunch eased in 2013, but its long-term fiscal and economic prospects remain clouded.

Is Wisconsin or California a signpost for what is to come? Almost certainly, both are. Just as the Progressive movement took a variety of forms, reflecting the complex interplay of competing interests, so it is today. Private union members are not very sympathetic to public sector employees’ efforts to improve their (often superior) perks. Nor do private sector taxpayers, or currently employed state and city employees, necessarily worry about meeting the pension costs of already retired public workers. Public school teachers, college professors, policemen and firemen, and civil servants do not always have interests in common. And the fact that the fiscal woes of Democratic blue states outweigh those of Republican red ones may have unpredictable policy and political consequences.

Lincoln Steffens, the most prominent of the muckraker journalists of the Progressive era, focused national attention on the political corruption of his time. The Struggle for Self-Government (1906) described the boss-machine-business triad in six states. But his iconic work was The Shame of the Cities (1904), which described how a number of the largest American cities were (mis)governed. Like some states, many American cities now face out-of-control costs and entitlement commitments. Public employee pension plans (3,200 of them), health care, and salaries pose massive problems of taxation and debt. The unfunded liabilities of local pension plans were estimated to be more than $574 billion in 2012. California’s cities and towns have been prime examples of the unsustainable liabilities issue. More recently, Detroit became the largest American city to file for bankruptcy.

Perhaps as a consequence, big-city mayors, like state governors, are some of the more interesting figures in American politics. They include now-Senator Cory Booker of Newark, Michael Nutter of Philadelphia, and new HUD head Julián Castro of San Antonio. Antonio Villaraigosa of Los Angeles, Michael Bloomberg of New York, and Rahm Emanuel of Chicago have had to wrestle with the inadequately funded pension and other costs of public employees. Bloomberg's successor Bill de Blasio hews to a pro-union, liberal-Left approach to the office, promising an interesting alternative case study in the city as a laboratory of democracy.

Do we stand on the cusp of a sea change in the character of the modern state? Are we, at the start of the twenty-first century, undergoing a shift comparable in its scale and impact to the rise of the welfare-warfare state in the twentieth? It would be temerity itself to make such a claim. But it would be no less rash to dismiss the current stirrings as froth of the moment, not worth close attention.