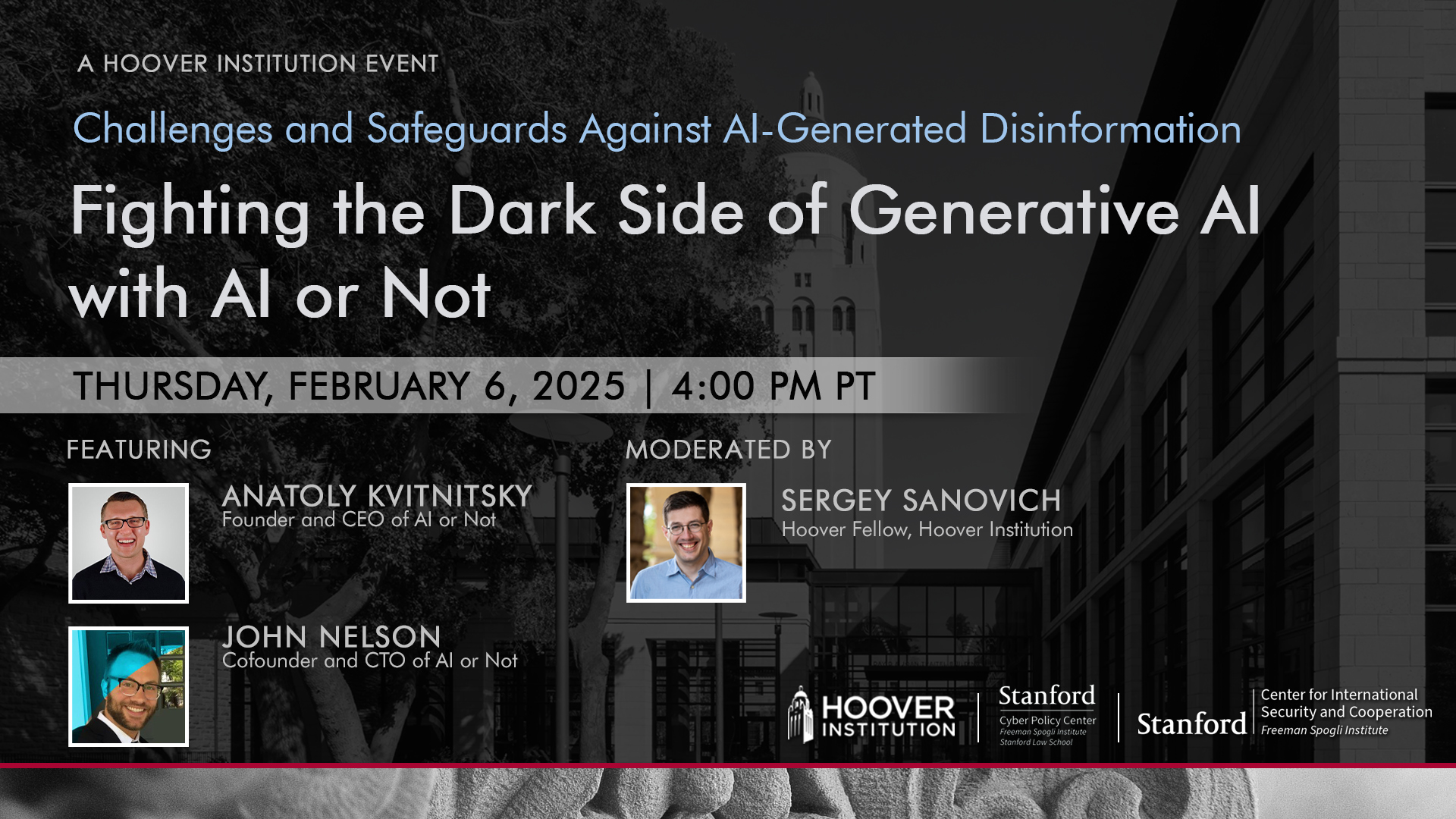

The sixth session of Challenges and Safeguards against AI-Generated Disinformation, "Fighting the Dark Side of Generative AI with AI or Not," will feature Anatoly Kvitnitsky, John Nelson, and Sergey Sanovich on Thursday, February 6, 2025, at 4:00 pm in HHMB 160, Herbert Hoover Memorial Building.

ABOUT THE SPEAKERS

Anatoly ‘Toly’ Kvitnitsky is the founder and CEO of AI or Not, an AI detection startup helping organizations protect themselves against the risks of generative AI. Previously, he was an investor at Amex Ventures, the venture arm of American Express. Before that, he was an early employee and senior executive at Trulioo, a unicorn in the KYC (know your customer) compliance industry. Anatoly holds an MBA from the USC Marshall School of Business and a BS from Rutgers University.

John ‘Johnny’ Nelson is the co-founder and CTO of AI or Not, an AI detection startup helping organizations protect themselves against the risks of generative AI. Previously, John was a senior machine learning engineer at LBRY, a leader in the decentralized publishing industry. He has also done research for the Cato Institute and started the Chordoma Research Foundation to fight a rare form of cancer. John holds a PhD from George Mason University and a BS from the University of Maryland.

Sergey Sanovich is a Hoover Fellow at the Hoover Institution. Before joining Hoover, he was a postdoctoral research associate at the Center for Information Technology Policy at Princeton University. Sanovich received his PhD in political science from New York University and continues his affiliation with its Center for Social Media and Politics. His research focuses on online censorship and propaganda by authoritarian regimes, including their use of AI, as well as on Russian foreign policy, politics, and information warfare against Ukraine. His work has been published in the American Political Science Review, Comparative Politics, Research & Politics, and Big Data, and as the lead chapter in an edited volume on disinformation from Oxford University Press. Sanovich has also contributed to several policy reports focused on protection from disinformation, including “Securing American Elections,” issued by the Stanford Cyber Policy Center at its launch.

ABOUT THE SERIES

Distinguishing between human- and AI-generated content is already an important problem in multiple domains, from social media moderation to education. As a result, there is a rapidly growing body of empirical research on AI detection and an equally rapidly growing industry of commercial and noncommercial applications. But will current tools survive the next generation of LLMs, including open models and models built specifically to bypass detection? What about the generation after that? Through cutting-edge research and presentations from leading industry professionals, this series will clarify the limits of detection in the medium and long term and help identify the optimal points and types of policy intervention. This series is organized by Sergey Sanovich.