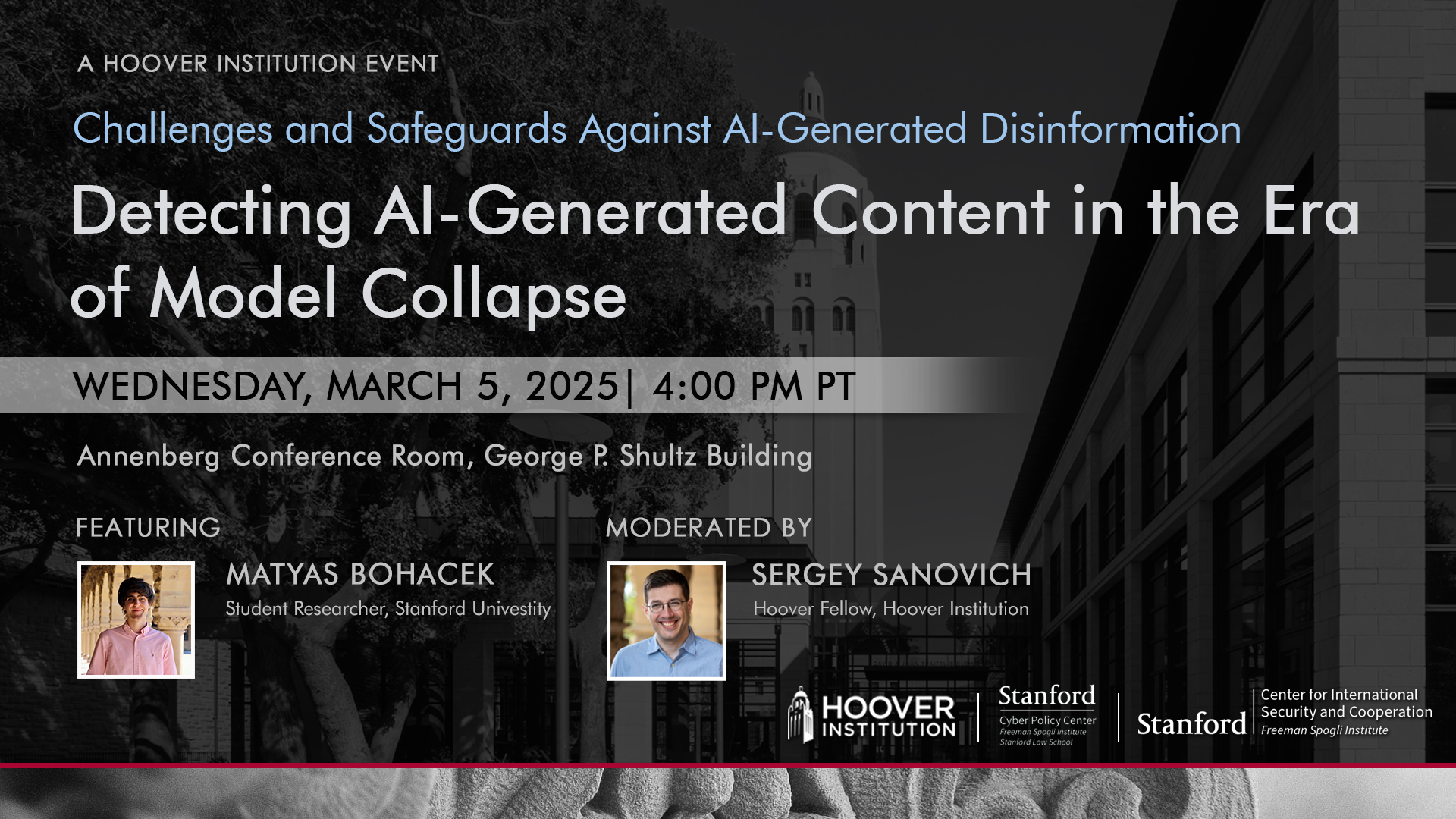

The eighth session of Challenges and Safeguards against AI-Generated Disinformation will discuss Detecting AI-Generated Content in the Era of Model Collapse with Matyas 'Maty' Bohacek and Sergey Sanovich on Wednesday, March 5th, 2025, at 4:00 pm in HHMB 160, Herbert Hoover Memorial Building.

Papers: Protecting world leaders from deep fakes | AI News Anchor | Model Collapse | Has an AI model been trained on your images?

ABOUT THE SPEAKERS

Matyas 'Maty' Bohacek is a student researcher at Stanford University advised by Professor Hany Farid. His research focuses on AI, computer vision, and media forensics. His recent work demonstrated that when generative AI models are trained on their own outputs, they collapse. His method for identifying whether an image was used in AI training enables audits of existing models and promotes more transparent, equitable AI development. Maty also built a deepfake detector for President Zelenskyy of Ukraine and created a deepfake CNN news anchor, which opened a primetime news segment to raise awareness of AI threats. Beyond media forensics, he developed an AI-powered sign language translator, now used in sign language classes at some US universities. His work has been featured in Forbes, CNN, Science, Nature, and PNAS.

Sergey Sanovich is a Hoover Fellow at the Hoover Institution. Before joining the Hoover Institution, he was a postdoctoral research associate at the Center for Information Technology Policy at Princeton University. Sanovich received his PhD in political science from New York University and continues his affiliation with its Center for Social Media and Politics. His research focuses on online censorship and propaganda by authoritarian regimes, including their use of AI, and on Russian foreign policy, politics, and information warfare against Ukraine. His work has been published in the American Political Science Review, Comparative Politics, Research & Politics, and Big Data, and as a lead chapter in an edited volume on disinformation from Oxford University Press. Sanovich has also contributed to several policy reports, particularly on protection from disinformation, including “Securing American Elections,” issued by the Stanford Cyber Policy Center at its launch.

ABOUT THE SERIES

Distinguishing between human- and AI-generated content is already an important enough problem in multiple domains, from social media moderation to education, that there is a quickly growing body of empirical research on AI detection and an equally quickly growing industry of its commercial and noncommercial applications. But will current tools survive the next generation of LLMs, including open models and those focused specifically on bypassing detection? What about the generation after that? Cutting-edge research and presentations from leading industry professionals in this series will clarify the limits of detection in the medium and long term and help identify the optimal points and types of policy intervention. This series is organized by Sergey Sanovich.