You walk into your shower and find a spider. You are not an arachnologist. You do, however, know that any one of the following is possible:

The spider is real and harmless.

The spider is real and venomous.

Your next-door neighbor, who dislikes your noisy dog, has turned her personal surveillance spider (purchased from “Drones ‘R’ Us” for $49.95) loose and is monitoring it on her iPhone from her seat at a sports bar downtown. The pictures of you, undressed, are now being relayed on multiple screens during the break of an NFL game, to the mirth of the entire neighborhood.

Your business competitor has sent his drone assassin spider, which he purchased from a bankrupt military contractor, to take you out. Upon spotting you with its sensors, and before you have any time to weigh your options, the spider shoots a tiny needle into a vein in your left leg and takes a blood sample. As you beat a retreat out of the shower, your blood sample is being run on your competitor’s smartphone for a DNA match. The match is made against a DNA sample of you that is already on file at EVER.com (Everything about Everybody), an international database (with access available for $179.99). Once the match is confirmed (a matter of seconds), the assassin spider outruns you with incredible speed into your bedroom, pausing only long enough to dart another needle, this time containing a lethal dose of a synthetic, undetectable poison, into your bloodstream.

The assassin, on summer vacation in Provence, then withdraws his spider under the crack of your bedroom door and out of the house and presses its self-destruct button. No trace of the spider or the poison it carried will ever be found by law enforcement authorities.

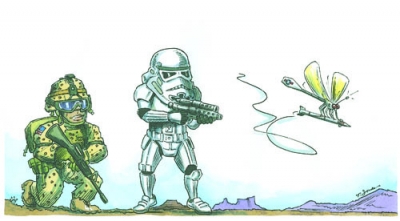

This is the future. According to some uncertain estimates, insect-sized drones will become operational by 2030. These drones will be able to not only conduct surveillance but also act on it with lethal effect. Over time, it is likely that miniaturized weapons platforms will evolve to be able to carry not merely the quantum of lethal material needed to execute individuals, but also weapons of mass destruction sufficient to kill thousands. Political scientist James Fearon even speculates that at some more distant time, individuals will be able to carry something akin to a nuclear device in their pockets.

Assessing the full potential of technology requires a scientific expertise beyond my ken. The spider in the shower is merely an inkling of what probably lies in store. But even a cursory glance at ongoing projects shows us the mind-bending speed at which robotics and nanobotics are developing. A whole range of weapons are growing smaller, cheaper, and easier to produce, operate, and deploy from great distances. If the mise-en-scène above seems unduly alarmist or too futuristic, consider the following: drones the size of a cereal box are already widely available, can be controlled by an untrained user with an iPhone, cost roughly $300, and come equipped with cameras. Palm-sized drones are commercially available as toys, although they are not quite insect-sized and their sensory input is limited to primitive perception of light and sound.

TOYS NO LONGER

True minidrones are in the developmental stages, and the technology is progressing quickly. The technological challenges seem to lie not in making the minidrones fly but in making them do so for long periods while also carrying some payload (surveillance or lethal capacity). The flagship effort appears to be the Micro Autonomous Systems and Technology (MAST) alliance, which is funded by the Army and led by BAE Systems and UC Berkeley, among others. The alliance’s most recent creations are the Octoroach and the BOLT (Bipedal Ornithopter for Locomotion Transitioning). The Octoroach is an extremely small robot with a camera and radio transmitter that can cover up to a hundred meters on the ground. The BOLT is a winged robot that can also scuttle about on the ground to conserve energy.

Scientists at Cornell University, meanwhile, recently developed a hand-sized drone that uses flapping wings to hover, although its stability is still limited and battery weight remains a problem. A highly significant element of the Cornell effort, however, is that the wing components were made with a 3D printer. Three-dimensional printers are already in use. This heralds a not-too-distant future in which a person can simply download the design of a drone at home, print many of the component parts, assemble them with a camera, transmitter, battery, and so on, and build a fully functioning, insect-sized surveillance device.

Crawling minidrones are clearly feasible, merely awaiting improvements in range and speed to be put to work on the battlefield and in the private sector. Swarms of minidrones are being developed to operate with a unified goal in diffuse command and control structures. Robotics researchers at the University of Pennsylvania recently released a video of what they call “nano quadrotors”—flying mini-helicopter robots that engage in complex movements and pattern formation.

Nanobots or nanodrones are still more futuristic. Technology for manufacturing microscopic robots has been around for a few years, but recent research has advanced to microscopic robots assembling themselves and even performing basic tasks. The robotics industry, both governmental and private, is working hard to enhance autonomous capabilities, that is, to be able to program a robot to perform complex tasks with only a few initial commands and no continuous control. Human testing for a microrobot that can be injected into the eye to perform certain surgical tasks is now on the horizon. Similar developments have been made toward nanobots that will clear blocked arteries and perform other procedures.

Now put the robotics technology alongside other scientific advancements—the Internet, telecommunications, and biological engineering—all of which empower individuals to do both good and terrible things to others. From here, it is not hard to imagine a world rife with miniature, possibly molecule-sized, means of inflicting harm on others from great distances and under a cloak of secrecy.

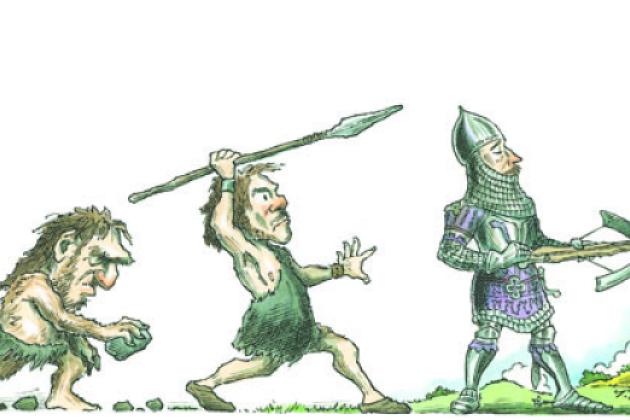

AN END TO MONOPOLIES ON VIOLENCE

When invisible, remote weapons become ubiquitous, neither national boundaries nor the lock on our door will guarantee us an effective defense. When the risk of being detected or held accountable diminishes, inhibitions toward violence decrease. Whether political or criminal, violence of every kind becomes easier to inflict and harder to prevent or account for. Ultimately, modern technology makes individuals at once vulnerable and threatening to all other individuals to an unprecedented degree: we are all vulnerable, and all menacing.

Three special features of new weapons technologies seem likely to make violence more possible and more attractive: proliferation, remoteness, and concealment. The technologies essentially generate a more level playing field among individuals, groups, and states.

Proliferation leads to a democratization of threat. Countries can generally be monitored, deterred, and bargained with, but individuals and small groups are much harder to police, especially on a global scale. If governmental monopolies over explosives, guns, or computer technologies have proven nearly impossible to enforce, how much harder will it be to control deadly minidrones, including homemade weapons, that are secretly built and untraceable?

The violence-exacerbating feature of remoteness, meanwhile, creates a mental distance between the attacker and his victim. When the body is out of harm’s way but the mind is engaged in a playlike environment of control panels and targets moving onscreen, killing can quickly turn into a game.

Any hope that this level playing field of the future will be a bloodless contest of robots fighting one another, rather than robots fighting humans, is likely to be in vain. If a war between robots, whether miniaturized spiders or large Reaper drones, were possible, all wars could be resolved through video games or duels. But wars are ultimately fought between humans, and to matter, they need to hurt humans. The fewer humans available to hurt on the battlefield, the more humans outside the battlefield will be subjected to the suffering and death demanded by war.

Many of our political, strategic, and legal frameworks for dealing with violence assume that a violent act can be attributed to its source and that this source can be held accountable, whether through a court of law or through retaliatory strikes. If defenses against invisible threats such as microrobots and cyberattacks fail to keep up with attackers’ skills, violent actors will no longer be inhibited by the risk of exposure. Safely concealed, they will have a free pass for killing.

A NIGHTMARE DEFERRED?

Most technological innovations in weapons, biology, or the cyber world are closely followed by apprehensions of Armageddon—so much so that in some cases people make pre-emptive efforts to ban these technologies. Take, for instance, the 1899 treaty to ban balloon air warfare, which is still in force for its signatories (among them the United States). Genetic and biological engineering, which can save and improve lives on a mass scale, also has been met with criticism about the impropriety of “man playing God” and predictions of the end of the human race. And yet the world still stands, despite the existence of destructive capabilities that can blow up planet Earth hundreds of times over. In fact, some credit the very existence of nuclear weapons and the accompanying nuclear arms race with a reduction in overall violence.

Still, history has proven that offensive capabilities, at least for a time, usually outrun defensive capabilities. In the robotic context especially, as costs go down, availability grows, and the global threat grows with it. Even if defensive technologies catch up with present threats and many of the concerns raised here could be set aside, it is always useful to continue thinking about defense as it should evolve in the micro-world. Moreover, it is unclear that meeting threats with equal threats—as in the case of nuclear weapons—would yield a similar outcome of mutual deterrence when it comes to personalized weapons of the kind I imagine.

We should also note that robots can be put to good or bad use, and the good or bad often depends on where one stands. On today’s battlefields, robots serve many functions, ranging from sweeping for improvised explosive devices (IEDs), to medical evacuation, supply chain management, surveillance, targeting, and more. Technology has life-saving as well as life-taking functions. But the same systems that can save victims of atrocities can easily be deployed for pernicious purposes.

The Internet, telecommunications, travel, and commerce have all made the world smaller and strengthened global interconnectedness and socialization. In a recent eloquent and wide-ranging book, Harvard psychologist Steven Pinker argues that our society is the least violent in recorded history, in part because technology, trade, and globalization have made us more reasoned and, in turn, more averse to violence. Notwithstanding Pinker’s powerful account, the same technology that brings people closer (computers, telecommunications, robotics, biological engineering, and so on) now also threatens to enable people to do infinitely greater harm to each other.

The threat from technological advances is only one manifestation of a sea change in social and cultural relations that technology as a whole brings about. Technology influences both capabilities and motivations, but not along a single trajectory; the technology to inflict harm is countered by the technology to prevent or correct for it. Motivations to inflict harm are constantly shaped and reshaped by the local and global environments. So any assessment of new weapons must reckon with both the growing capability to inflict violence and the growth or decline in motivations to do so.

If it turns out that political, ideological, or personal motivations for harm diminish—for instance, because interconnectivity and interdependence reduce violent hatred, demonization of others, or the attractiveness of violence as a means to promote ideological goals—we have less to worry about. But it seems to me that the lethal capabilities of miniaturized technologies will spread before pacifying social forces, whatever their nature, can turn more of our world into a peaceful place.

Violence, of course, does not require fancy weapons. Even in our present age, it took little more than machetes for Hutus to kill 800,000 Tutsis and moderate Hutus in a mere hundred days. When people want to kill other people, they can. But having simpler means to do so might tip the scale.