As artificial intelligence (AI) moves into the mainstream, the possibilities it creates—both positive and nefarious—are seemingly limitless.

But the growth of AI capabilities comes with risk, from foolish generative fakery swaying elections to the creation of an all-powerful artificial general intelligence (AGI) that could threaten humanity itself, with countless other challenges emerging that fall between those two extremes.

That’s why policymakers must develop robust, flexible, and competent agencies to confront these challenges and ensure safe use of AI.

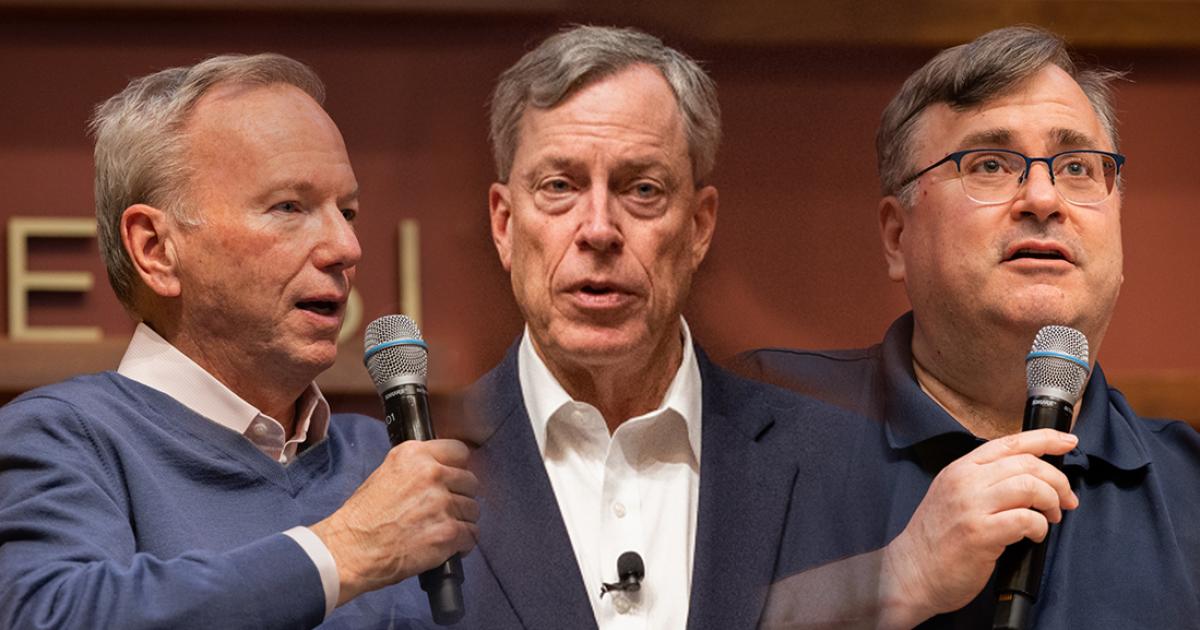

So the Hoover Institution and Stanford’s Institute for Human-Centered Artificial Intelligence (HAI) organized a conference on April 15 that saw scholars, newly minted AI regulators, and titans of Silicon Valley discuss how nations will go about dealing with the profound unknowns that come with continued artificial intelligence expansion. The first day of the conference, at Hoover, focused principally on catastrophic risks and how governments are learning to evaluate frontier models. On April 16, Stanford HAI hosted a symposium, looking broadly at issues of AI and society, featuring speakers from around the world.

Throughout the afternoon-long discussion at Hoover, one thing became ominously clear: in most jurisdictions across the globe, policy development is lagging far behind the progress of leading AI models.

Marietje Schaake, international policy fellow at HAI and former member of the European Parliament, told attendees that, before anything else, nations with robust AI development must develop a regulatory framework for its use.

The first law of its kind, the EU AI Act, is set to come into force later in 2024, with up to two years for firms developing AI models to come into compliance.

She said this act gives the EU “first mover advantage” as the first entity codifying actual laws to govern the use of AI, but there are major blind spots policymakers also must consider.

Schaake reminded everyone that only thirty-five of the roughly two hundred countries on earth currently have the computing power to develop large language models.

A Non-Proliferation Act for Artificial General Intelligence

Since neither the EU's AI Act nor any pending U.S. legislation has figured out how to address possible catastrophic risks in new models, Hoover senior fellow Philip Zelikow said there are a number of pathways to consider, but timing is key.

“If we’re going to do gigantic stuff to manage catastrophic risk, here’s a deep insight: doing it before is better,” Zelikow said.

Zelikow then focused on the accomplishments so far. There is now an international scientific panel summarizing evidence on the dangers. Led by the British, there are now AI safety institutes in the UK, the US, and Japan, and they are learning how to evaluate models and define best practices. As those practices become clear, companies must take them into account if they wish to manage the enormous liability risks that go with the deployment of their products.

Further, Zelikow stressed that research into AI's dangers is necessary in order to prepare for its possible misuse. That security requirement will force government to sponsor dangerous research and also to set standards for how to conduct it.

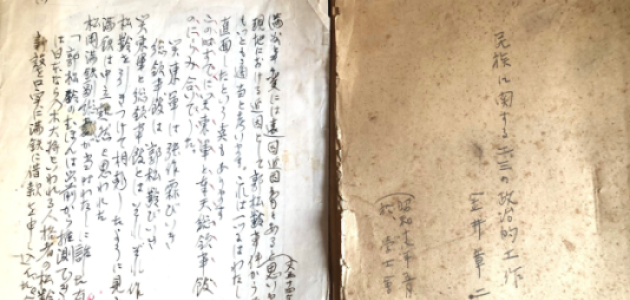

Reminding the audience about the danger of misuse, Zelikow described how during a period of détente in the Cold War, the Soviet Union signed the 1972 Biological Weapons Convention banning such weapons. Yet it still pursued one of the largest biological weapons research programs ever assembled.

He said this experience, in which it took US intelligence nearly twenty years to prove the Soviets were secretly and grossly violating the convention, worries him when it comes to pursuing bad actors deploying AI.

“We have to evaluate not just what all the companies are doing, but what are the worst people on earth planning to do,” Zelikow said.

Yoshua Bengio of the University of Montreal and the Quebec AI Institute was one of three people to share the 2018 Turing Award for work on the neural networks that will pave the way for advances in AI.

Bengio said that his biggest worry is the competition over the next decade to develop the first working artificial general intelligence (AGI), a type of artificial intelligence that can perform a wide range of cognitive tasks as well as or better than humans.

“As we increase the number of AGI projects in the world, the probability of existential risk increases,” he said, adding that there is no guarantee an AGI would not turn against humanity once it emerged.

“We need a non-proliferation act for AGIs,” Bengio suggested.

Meeting the AI Sheriffs

For its part, the United States is far from drafting a regulatory framework similar to the European Union’s. The US Artificial Intelligence Safety Institute, a government entity established by executive order under the Biden administration to set standards for AI safety, is only a few months old.

The institute's chief, Elizabeth Kelly, told attendees that the federal government is already using AI to perform nearly seven hundred functions, from expediting approval of court documents to helping organize crop data.

She said her agency, housed within the National Institute of Standards and Technology, will eventually test all AI applications before they are released for public use.

Kelly also discussed how China is facing some of the same challenges stemming from the rapid emergence of AI technology, specifically AI’s ability to make cybercrime, cyberwarfare, and biological weapons development easier.

Making biological weapons development easier was a concern shared by representatives of the United Kingdom’s AI Safety Institute, whose deputy minister, director, and chief technology officer spoke at the conference.

Coaching a Novice into Doing Something Dangerous

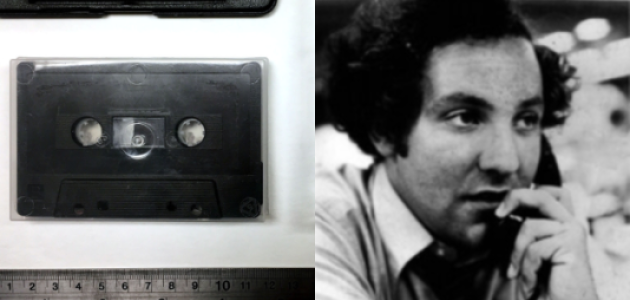

UK AI Safety Institute chief technology officer Jade Leung spoke of a series of tests her organization is undertaking to see how generative AI can enable someone with only a novice understanding of a subject to become an expert.

In some instances, AI can “coach a novice through complex biological or chemical processes, acting like a lab supervisor,” Leung said.

“If you get stuck as a novice, you’re going to have to Google around and probably stay stuck,” she said. “But if you have access to (a generative AI) model it’s going to give you specific, tailored coaching that may unstick you.”

There is a belief among AI experts that advanced generative AI can “boost” the capabilities of individuals with only entry-level knowledge of a dangerous topic such as explosives development or biochemistry to the level at which they can produce dangerous substances by themselves.

She said her group is preparing an exercise that will test whether “an AI can coach a novice in a wet lab into making dangerous bioagents.”

UK Deputy Minister for AI Henry De Zoete said the AI Safety Institute has already secured £100 million (pounds sterling) in government funding, many times more than what the US Congress has authorized for its AI safety entity, and is working to build more than £1 billion worth (equivalent to $1.25 billion) of computing power that can be used to test and evaluate existing large language models.

For the final presentation of the conference, former Google chairman Eric Schmidt and LinkedIn cofounder Reid Hoffman discussed what the future of AI may look like.

Schmidt gave examples of how the growing power of generative AI will lead to scenarios that we can scarcely comprehend today.

Schmidt told the audience to imagine the results if the United States successfully bans the social media app TikTok. A sufficiently advanced AI could be asked by a user to generate a new, wholly independent copy of that app, properly coded and all, and then release it online in various ways in a bid to make it go viral, he said. How could any government ensure the app stayed banned in that scenario?

“My point here is: I don’t think we have any model for human productivity of the kind I am describing—of everyone having their own programmer who actually does what we want,” Schmidt said.

For his part, Hoffman said that while we’ll continue to see successes and breakthroughs by AI firms both big and small, the future he envisions may see the AI marketplace consolidate into only four or five big players. He said there is a chance all four or five companies may be located in only one or two countries.

“The path is a very small number of very powerful computers,” Hoffman said. “Where does that leave the rest of the world?”