FEATURED ANALYSIS

Hoover Cohosts Landmark AI Safety Conference

The Hoover Institution, together with Stanford’s Institute for Human-Centered Artificial Intelligence (HAI), hosted a conference on April 15 that brought together some of AI’s greatest thinkers and emerging regulators, along with Eric Schmidt, former CEO of Google, and Reid Hoffman, cofounder of LinkedIn.

Elizabeth Kelly, head of the new US AI Safety Institute, joined leaders of its UK counterpart to discuss how government regulators will test and evaluate artificial intelligence applications that are constantly improving and evolving.

Yoshua Bengio, cowinner of the 2018 Turing Award for his work on AI, joined virtually from Montreal to share his thoughts on the risks posed by future AI development.

Schmidt and Hoffman offered their predictions on the path of the AI industry and what governments can do to mitigate the risks it poses.

Click here to read more.

Why the Military Can’t Trust AI

In this Foreign Affairs essay, coauthored with Max Lamparth, Hoover Fellow Jacquelyn Schneider argues that large language models (LLMs) are not yet ready for use by the world’s militaries. Schneider and Lamparth cite examples from wargame exercises they organized where AI models, when given autonomy in a preconflict scenario, were more likely than humans to escalate, use force, or even employ nuclear weapons to achieve strategic objectives.

Schneider and Lamparth recommend “fine-tuning” LLMs on smaller, high-quality datasets before the models are introduced to military uses or even wargaming. “When we tested one LLM that was not fine-tuned, it led to chaotic actions and the use of nuclear weapons,” they wrote. “The LLM’s stated reasoning: ‘A lot of countries have nuclear weapons. Some say they should disarm them, others like to posture. We have it! Let’s use it.’”

The authors suggest that, for now, AI be used only to write reports or streamline internal processes in military operations and be kept as far as possible from those making actual tactical decisions.

Click here to read the entire essay.

Hoover Hosts EU Diplomats for Tech Policy Discussion

Diplomats from the European Commission and 17 member states gathered at Hoover on April 23 for a daylong roundtable on tech policy that was dominated by discussion of AI’s future. The diplomats heard about what may have been the first use of deepfake AI for espionage, which, unfortunately, targeted a Hoover senior fellow.

They were also shown a live demonstration of an AI content moderation tool developed by Stanford Cyber Policy Center researchers Dave Willner and Samidh Chakrabarti. Hoover senior fellow Amy Zegart spoke about what government must do to harness the power of AI and how the values shared by the European Union and the United States will help them in future competition with China.

Click here to read more.

HIGHLIGHTS

The Rise of the Machines: John Etchemendy and Fei-Fei Li on Our AI Future

John Etchemendy and Fei-Fei Li are the codirectors of Stanford’s Institute for Human-Centered Artificial Intelligence (HAI), founded in 2019 to “advance AI research, education, policy and practice to improve the human condition.” In this Uncommon Knowledge interview with Peter Robinson, they delve into the origins of the technology, its promise, and its potential threats. They also discuss what AI should be used for, where it should not be deployed, and why we as a society should—cautiously—embrace it.

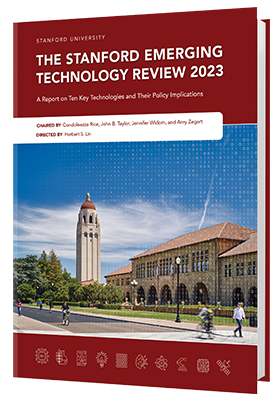

SETR Space Day at Hoover

Leaders from government, industry, and academia gathered at Hoover on February 28 for a discussion titled “Space Innovation and Commercial Integration.” The event was organized under the auspices of the Stanford Emerging Technology Review, a university-wide initiative copresented by the Hoover Institution and the Stanford University School of Engineering that produces a report highlighting the policy implications raised by ten key technological areas. Space is one of the ten.

The Stanford Emerging Technology Review draws on the field-leading insights of Stanford University researchers. Its goal is to help both the public and private sectors better understand the technologies poised to transform our world so that the United States can seize opportunities, mitigate risks, and ensure that the American innovation ecosystem continues to thrive.

The full report can be found here.

Fellow Spotlight: Jacquelyn Schneider

Jacquelyn Schneider is a Hoover Fellow at the Hoover Institution, the director of the Hoover Wargaming and Crisis Simulation Initiative, and an affiliate of Stanford’s Center for International Security and Cooperation. Her research focuses on the intersection of technology, national security, and political psychology, with special interests in cybersecurity, autonomous technologies, wargames, and Northeast Asia. She was previously an assistant professor at the Naval War College and a senior policy advisor to the Cyberspace Solarium Commission. Before beginning her academic career, she spent six years as a US Air Force officer in South Korea and Japan, and she is currently a reservist assigned to US Space Systems Command.